In the buzz around AI, let’s not ignore the role of data for developing AI we can trust, says one Notre Dame computational scientist.

Two years ago, Notre Dame launched the Trusted AI project with collaborators at Indiana University, Purdue University, and the Naval Surface Warfare Center Crane Division (NSWC Crane).

In the time since the project launched, AI has undergone an important change. Not only has it developed technologically, but it has also become a matter of public interest. For example, since it launched less than a year ago, OpenAI’s chatbot ChatGPT has gained over 100 million users, and six in ten U.S. adults say they know about the technology.

According to Charles Vardeman, a computational scientist and research assistant professor at the University of Notre Dame’s Center for Research Computing, the buzz surrounding generative artificial intelligence (AI) can sometimes draw attention away from a crucial element that determines whether we can trust AI or not: data.

“In AI development, much of the attention is given to fine-tuning model architectures, experimenting with different layers, activation functions, and optimization algorithms,” Vardeman explains. “While this approach has driven many innovations, it may sometimes overlook the central role of data in AI success.”

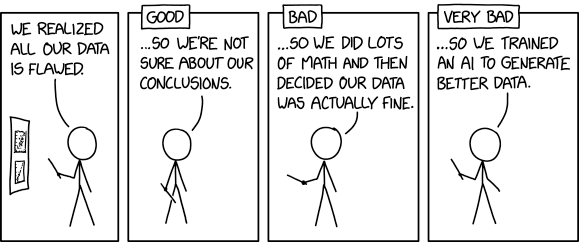

Vardeman illustrates the problem with a cartoon called “Flawed Data” by XKCD:

For Vardeman, the message is simple: Trusted AI needs trusted data, and “irrespective of their complexity, models are only as good as the data they learn from.”

Vardeman leads the Frameworks Project, a part of the larger Trusted AI project that provides research, methodologies, and tools to enable trusted and ethical AI. He says he and his collaborators find inspiration in the Data Centric AI movement, championed by tech leaders like Andrew Ng and others. The movement emphasizes the importance of tools and practices that systematically improve the data used to build an AI system.

“By prioritizing data quality, encompassing aspects such as cleanliness, relevance, diversity, and contextual richness, AI systems can achieve better performance with potentially simpler architectures,” Vardeman says. He points out that the shift toward a data-centric approach is “not just a technical reorientation; it’s a recalibration of AI development’s very essence, placing data at the heart of the innovation process.”
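As a rough illustration of what checking data quality can look like in practice, the sketch below counts a few basic indicators (incomplete entries, exact duplicates, and label balance) for a small labeled dataset. The function name `summarize_quality` and the sample records are hypothetical and are not drawn from the Frameworks Project; real data-centric tooling would go much further.

```python
from collections import Counter


def summarize_quality(records):
    """Return simple quality indicators for a list of (text, label) pairs.

    Hypothetical helper for illustration only.
    """
    total = len(records)
    # An entry is incomplete if its text is empty or its label is missing.
    missing = sum(1 for text, label in records if not text or label is None)
    # Exact duplicates: entries beyond the first copy of each unique pair.
    duplicates = total - len(set(records))
    # Label balance gives a crude view of how diverse the data is.
    label_counts = Counter(label for _, label in records if label is not None)
    return {
        "total": total,
        "missing": missing,
        "duplicates": duplicates,
        "label_counts": dict(label_counts),
    }


# Made-up sample data, used only to exercise the function.
sample = [
    ("ship on horizon", "vessel"),
    ("ship on horizon", "vessel"),  # exact duplicate
    ("", "vessel"),                 # empty text
    ("clear sky", None),            # missing label
    ("clear sky at dawn", "none"),
]

report = summarize_quality(sample)
print(report)
```

Even simple counts like these can point to problems in a dataset before any model is trained on it.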

According to Vardeman, a data-centric approach aligns well with the military’s requirements.

“In complex environments where variability and uncertainty are common, the depth and quality of data can make a significant difference,” he explains. “A well-annotated dataset that captures the intricacies of real-world scenarios allows AI models to learn more effectively and generalize better to unseen situations.”

The Frameworks Project commits to data-centric goals that align with the Department of Defense’s data goals and strategies. It focuses on making data:

- Visible: Ensuring that data is discoverable by those who need it fosters transparency and helps build a foundation of trust in AI systems.

- Accessible: Making data readily available to authorized users enhances the efficiency and effectiveness of AI, providing the right information at the right time.

- Understandable: Clear documentation and metadata contribute to the explainability of AI, a core dimension of trust.

- Linked: Connecting related data sets facilitates more coherent AI analysis, ensuring robustness and reliability in decision-making.

- Trustworthy: Maintaining the integrity and quality of data ensures that AI systems are dependable, echoing the Trustworthy dimension in our trust framework.

- Interoperable: Enabling data to be used across different systems and platforms fosters collaboration and integration, key aspects of our community engagement efforts.

- Secure: Implementing strong data security measures safeguards privacy and aligns with the ethical considerations central to trusted AI.

Vardeman says that by recognizing data as a strategic asset and prioritizing these seven goals, the Frameworks Project is “nurturing an environment where trusted AI can flourish.”
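One way to picture the seven goals is as a checklist applied to a dataset's metadata. The sketch below is a minimal illustration under stated assumptions: the `DatasetRecord` fields and the `unmet_goals` function are hypothetical stand-ins chosen for this example, and they do not represent the Frameworks Project's tooling or any Department of Defense schema.

```python
from dataclasses import dataclass, field


@dataclass
class DatasetRecord:
    """Hypothetical metadata record; each field stands in for one goal."""
    name: str
    catalog_entry: str = ""            # Visible: where the dataset is listed
    access_policy: str = ""            # Accessible: who may use it and how
    documentation: str = ""            # Understandable: docs and metadata
    related_datasets: list = field(default_factory=list)  # Linked
    checksum: str = ""                 # Trustworthy: integrity evidence
    data_format: str = ""              # Interoperable: a shared format
    encrypted_at_rest: bool = False    # Secure: a basic protection flag


def unmet_goals(record):
    """List the goals for which the record carries no evidence."""
    checks = {
        "Visible": bool(record.catalog_entry),
        "Accessible": bool(record.access_policy),
        "Understandable": bool(record.documentation),
        "Linked": bool(record.related_datasets),
        "Trustworthy": bool(record.checksum),
        "Interoperable": bool(record.data_format),
        "Secure": record.encrypted_at_rest,
    }
    return [goal for goal, satisfied in checks.items() if not satisfied]


# Made-up example: a dataset that is cataloged and documented but little else.
record = DatasetRecord(
    name="sensor-logs",
    catalog_entry="catalog/sensor-logs",
    documentation="README.md",
    data_format="parquet",
)
gaps = unmet_goals(record)
print(gaps)
```

A checklist like this only tests whether evidence is present, not whether it is adequate; judging the quality of documentation or security controls would still require human review.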

As the Frameworks Project enters its third year, Vardeman says, it will build on the momentum of the data-centric AI movement and focus on further refining data quality and context.

For the Trusted AI (TAI) Frameworks Project, a data-centric philosophy is a cornerstone: robust data management practices can lead to more reliable, interpretable, and trusted AI systems. This perspective does not diminish the value of innovative modeling but places it in the context of a balanced and well-considered AI development strategy, where data and models work in harmony.

Vardeman says, “The data-driven journey of the Trusted AI Frameworks Project illuminates the vital role of data in enhancing the trustworthiness and success of AI deployment within the Navy and Marine Corps. With a vision rooted in collaboration, ethical excellence, and technological innovation, the path forward promises to be an exciting and transformative adventure.”

“Together,” he says, “academia, industry, and the military will continue to forge a data-centric future that not only serves the needs of the present but anticipates the challenges and opportunities of tomorrow.”

Contact

Brett Beasley / Writer and Editorial Program Manager

Notre Dame Research / University of Notre Dame

bbeasle1@nd.edu / +1 574-631-8183

research.nd.edu / @UNDResearch

About the Center for Research Computing

The Center for Research Computing (CRC) at the University of Notre Dame is an innovative and multidisciplinary research environment that supports collaboration to facilitate multidisciplinary discoveries through advanced computation, software engineering, artificial intelligence, and other digital research tools. The Center enhances the University’s innovative applications of cyberinfrastructure, provides support for interdisciplinary research and education, and conducts computational research. Learn more at crc.nd.edu.

About Notre Dame Research

The University of Notre Dame is a private research and teaching university inspired by its Catholic mission. Located in South Bend, Indiana, the University is advancing human understanding through research, scholarship, education, and creative endeavor in order to be a repository for knowledge and a powerful means for doing good in the world. For more information, please see research.nd.edu or @UNDResearch.